Centre for Educational Research and Innovation - CERI

A Primer on Learning: A Brief Introduction from the Neurosciences

by

Núria Sebastián Gallés

Grup de Recerca de Neurociencia Cognitiva

Parc Científic. University of Barcelona

http://www.ub.es/pbasic/sppb/

from her paper delivered at the Social Brain Conference held in Barcelona, 17-20 July 2004

“SCENE I. A desert place.

Thunder and lightning. Enter three Witches.

First Witch

When shall we three meet again

In thunder, lightning, or in rain?

Second Witch

When the hurlyburly's done,

When the battle's lost and won.

Third Witch

That will be ere the set of sun.”

(Macbeth, Act 1, scene 1)

William Shakespeare chose the following words to start Macbeth: “A desert place. Thunder and lightning. Enter three Witches.” With just these nine words, he is able to describe a fearful scene. Human beings do not like to be in desert places, much less when there is a storm, and least of all when three witches enter the scene. Why is it that some situations are scary? Many children (and adults) panic in the dark. Others freeze in front of a snake. Fear is not a good companion of reason and happiness. But in many circumstances, it helps in making permanent memories: a traumatic situation is almost impossible to forget.

“The Gileadites captured the fords of the Jordan River opposite Ephraim. Whenever an Ephraimite fugitive said, "Let me cross over," the men of Gilead asked him, "Are you an Ephraimite?" If he said, "No," then they said to him, "Say 'Shibboleth.'" If he said, "Sibboleth," and could not pronounce the word correctly, they grabbed him and executed him right there at the fords of the Jordan. On that day forty-two thousand Ephraimites fell dead.” (Judges 12)

We are told that forty-two thousand Ephraimites were killed because they could not pronounce the word “Shibboleth.” Put yourself in the shoes of an Ephraimite for a moment. You see that all your fellows are killed because of their failure to say “sh,” and you hear the Gileadites saying “Say ‘Shibboleth.’” In spite of facing the possibility of losing your life and the examples provided by your enemy, you cannot learn to pronounce “sh.”

These two examples show two of the most extreme situations in learning. The first one refers to circumstances that, even though experienced only once in your life, leave permanent memories. It is not unusual for traumatic experiences to create this type of memory. The second case refers to a situation where, in spite of multiple instances and a high motivation to learn, it is impossible to modify our behavior. Learning a second language is just one case in this category.

What is learning? How do we learn?

Multiple definitions of learning

As the reader might have guessed from the above quotation, it is a very difficult task to define what learning is. From a biochemical perspective, it could be said that learning is what happens when some molecules are modified. At a more global level, it can also be described as the increase in association between two events. The term “association” has been traditionally linked to the concept of “learning.” Indeed, in 1949 one of the fathers of computational neurobiology, Donald Hebb, postulated a computational rule, known as the “Hebbian rule,” which makes this assumption explicit. This rule postulates that learning implies coincident pre- and post-synaptic activity. Although many of Hebb’s ideas were not right, recent research in neurobiology has shown that this coincidence of activities causes synapses to change, and therefore that it constitutes the very basic mechanism of learning. But can learning be defined just by making reference to biochemical changes?
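The Hebbian rule described above can be illustrated with a minimal sketch. It is only a toy illustration under stated assumptions, not Hebb's own formulation: the connection strength is a single number, activity is coded as 0 (silent) or 1 (active), and the function names and the `learning_rate` parameter are hypothetical choices made for this example.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Return the connection strength after one Hebbian step.

    Assumption for this sketch: the change is proportional to the
    product of pre- and post-synaptic activity, so the weight only
    grows when both neurons are active at the same time.
    """
    return weight + learning_rate * pre * post


def train(activity_pairs, weight=0.0, learning_rate=0.1):
    """Apply the update once per (pre, post) activity pair."""
    for pre, post in activity_pairs:
        weight = hebbian_update(weight, pre, post, learning_rate)
    return weight


# Coincident activity: both neurons fire together on every trial.
coincident = [(1, 1)] * 5
# Non-coincident activity: the neurons never fire on the same trial.
non_coincident = [(1, 0), (0, 1), (1, 0), (0, 1), (0, 0)]

print("coincident:", train(coincident))
print("non-coincident:", train(non_coincident))
```

In this sketch the connection is strengthened only in the first case, where pre- and post-synaptic activity coincide; in the second case it stays at its starting value.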

One of the main problems in defining learning has to do with the more general issue of relating “mind” and “brain.” There is a long tradition in Western culture of separating these two levels. We can travel back to Greek philosophers, Descartes and even contemporary philosophers. One particular attempt at relating brain and behavior was phrenology. During the 19th century, there was a movement that tried to locate each cognitive function, and also personality traits, at precise brain locations. This approach was wrong, but it helped society to accept the notion that cognitive functions could be related to particular brain structures. Modern neuroscience has provided an appropriate background to address these issues. We now know that our cognitive functioning is very complex. It would be impossible to present any comprehensive account of it that only considers one level of description. Problems must be given the right level of explanation, but what is the proper level to explain “learning”?

Learning at a molecular level

What is the relationship between synaptic changes and behavioral changes? We are far from being able to answer this question but, as in other fields of neuroscience, we are getting closer and closer to an answer. In 1973, Timothy Bliss and Terje Lømo described for the first time in the mammalian brain the existence of LTP: Long-Term Potentiation. This was considered a great finding. Why was this discovery so important?

The basic unit (cell) of our nervous system is the neuron. Neurons can have many different shapes, some of them really spectacular (see the cerebellar Purkinje cell in the above figure). Although we can think of our nervous system as a road network, where electric impulses (or information) travel, it has one particular property that makes it radically different from most networks we are used to thinking of. Ramón y Cajal was the first to describe our nervous system as a discontinuous network of neurons: neurons would not be “touching” each other. Instead, they would stand next to each other, with gaps between them. For the nervous signal to travel across the system, it needs to “jump” over those gaps. The way this is done is through synapses (see figure). When the nervous signal reaches one end of a neuron (the end of an axon), it causes some substances (neurotransmitters) to be released into the gap.

These neurotransmitters induce some chemical reaction on the end of the adjacent neuron (a dendrite). This chemical reaction triggers the transmission of the nervous signal in the second neuron, so the signal continues to travel through our brain. The fact that our brain is not a “solid,” continuous network, but rather that it needs biochemical changes at crucial points to have the signal traveling across it, is the foundation of learning.

Different factors (like drug or alcohol consumption) can modify these biochemical reactions, but the repetition of the transmission also modifies their properties. In a sense, we can think of synapses as “living” connections; they will evolve and adapt themselves as a function of their past experiences. That is, our brain is a “plastic” structure. In this context, plastic refers to its ability to change its functioning as a consequence of past experiences. Our brain is “plastic” because the activity at the synapses changes. Now we have a hint about why the discovery of LTP in the mammalian brain was so important.

As has just been said, one current view of learning at a molecular level is that it has to do with molecular changes occurring at the synapses. One of the best candidates for this molecular change has been named Long-Term Potentiation. Long-Term Potentiation (LTP) is operationally defined as a long-lasting increase in synaptic efficacy which follows high-frequency stimulation of afferent fibers: this form of synaptic plasticity may participate in information storage in several brain regions.

We are far from understanding all the complex mechanisms involved in these processes. It is clear, even from personal experiences, that our brain operates better when our basic needs are satisfied (sleep, hunger, thirst). Fear has strong consequences (at a molecular level) in the way learning occurs. It has the consequence of modifying the biochemical properties of the neurotransmitters, making some experiences particularly salient (this is the basis of traumatic experiences that individuals are unable to forget throughout their entire lives).

Up to now we have been talking about learning as if there were just one type of it. But this is not the case. Indeed, it would be inaccurate to assume that our brain is a homogeneous structure, and that all of its parts have equivalent roles in learning. True, learning happens throughout our brain, but it would be erroneous to assume that there are no differences in learning how to play tennis, how to get around in a new building and how to speak a new language. Our mature brain is a highly specialized “device” and different types of knowledge and “activities” are carried out in different parts of it. So, in order to better understand how learning takes place, it will be necessary to consider not only “what” biochemical changes are taking place, but also “where” they are happening.

Is learning more than changes in synaptic strength?

Learning and brain systems

If learning is broadly defined as gathering knowledge, it bears a strong relationship with memory, as it refers to our capacity to store knowledge. Research in Cognitive Psychology showed that there are different types of “knowledge.” Neuroscience has confirmed and extended these findings by providing evidence of how different memory types are supported by different brain systems. We can have an initial understanding of the diversity of memory types and learning and storing mechanisms by considering the case of amnesic patients. It is well known that under some circumstances, one’s past life can be forgotten. This topic has been used by writers and movie makers: stories about people who, after suffering some traumatic experience, have forgotten “everything.” But, is it true that they have forgotten all of their memories? Amnesic patients usually have trouble remembering their names, where they live, work, where they went on vacation last summer, etc. Usually they forget information about their personal life. In fact, they may suffer a highly selective memory deficit by forgetting what happened during a particular traumatic experience, but without experiencing any “learning” problem.

Neuroscientists have proposed a fundamental division between two memory types: declarative memory and procedural memory. Broadly speaking, “declarative memory” corresponds to what cognitive psychologists call “explicit learning.” Explicit learning is usually related to our ability to put into words something that we are learning, for instance when describing the set of instructions that have been followed to acquire new data (e.g. how to cook a new dish). Many amnesic patients can be taught some skills, but they fail to explicitly state the sequence by which they managed to do so. At an anatomical level, declarative (explicit) memory involves the hippocampus, several surrounding cortical areas, and other cortical areas as well.

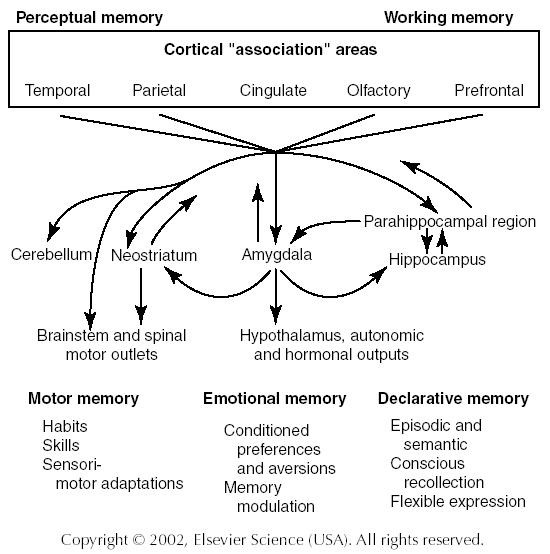

If declarative memory corresponds to explicit learning, procedural memory corresponds to implicit learning. Implicit knowledge is involved in many different aspects of our lives. We have an implicit knowledge of the grammar of our language that makes us reject a sentence like “the boy open the window” because “it is not correct.” Different studies have shown that implicit learning is involved even in classification tasks, which may at first be considered an example of declarative knowledge: participants can sort items into two categories without being able to tell what criteria they have used. Implicit learning is also involved in the slight changes in motor skills that accompany, for instance, our improvements when learning a new sport. From the point of view of the brain structures involved in this type of learning, there are two subsystems: the neostriatal subsystem (belonging to the basal ganglia) and the cerebellar subsystem. Although the two subsystems subserve different functions, they share the property of being essential in the processes of learning without conscious recollection. The following table illustrates the relationship between brain areas and memory systems (from Squire et al. p. 1303). In this diagram, a third memory system has been added: emotional memory.

Emotional memory is the learning and recollection of knowledge about our emotional state in a specific circumstance. The scene described by Shakespeare in the first words of Macbeth puts us in contact with different fearful experiences that left very strong memories in our brain. The consequences of emotional states in learning have been well documented in recent years. One central structure in emotions is the amygdala. It is a small structure, located in the inner part of the brain, which resembles an almond (this is where the name comes from). The amygdala, as can be seen in the diagram, receives inputs from many sensory and secondary centers and is responsible for increasing our heart rate and blood pressure and, together with the hypothalamus, for controlling a wide variety of hormones. The amygdala-hypothalamus complex can be seen as a powerful “drug-triggering” center in our brain. As mentioned in the preceding section, changes in the neurotransmitters may have important consequences in the learning processes. These effects can involve increasing the efficiency of storage, as in the case of the increase of arousal induced by emotional changes (McGaugh 2000). In general, the strength of a memory (learning) should correlate with its importance for the organism. The consequences of emotions can be viewed as a successful evolutionary mechanism for learning and remembering the important things of our lives (for a review of these topics, see Helmuth, 2003).

Research in cognitive neuroscience has shown that individuals suffering post-traumatic stress disorder (a highly disabling condition, associated with intrusive recollections of a traumatic event, avoidance of situations associated with the trauma, and psychological numbing) show abnormal functioning of the amygdala, hypothalamus and the medial frontal cortex. These studies have shown that the amygdala “overreacts” to stimuli associated with the traumatic experience, and the hypothalamus and medial frontal cortex seem unable to compensate for this.

Although all human beings may suffer a traumatic experience that will not be forgotten in their entire lives, it is undeniable that infancy and childhood are particularly sensitive periods for learning.

Learning at different moments in life

Up to this point we have been presenting evidence about learning considering that the brain is a relatively mature, stable structure. In this context, the changes occurring at the molecular level would not represent major transformations in its structure and overall functioning. However, as living entities in general, and as humans in particular, we are characterized by a very long period of growth. It is indisputable that not all kinds of knowledge can be learned in the same way at different moments in life. One of the most frustrating experiences as an adult learner is that of trying to acquire a new language, even more so if we compare ourselves with infants and young children, who are able to acquire a new language without apparent effort. Remember the example given at the beginning of this text of the Ephraimites and their inability to pronounce the sound “sh.” But not all learning is more difficult for adults than for children. In fact, both experience and our mature brain help us in most situations. It is clearly easier to learn to play chess at age 20 than at age 3. Why is it that for some types of knowledge (like learning a new language) having a “young brain” seems to be an advantage, while not for others? Many researchers have related the answer to this question to the existence of “critical periods” in brain development. Because this is a very controversial issue, it will be briefly addressed here.

One general property of the developing brain is that just before and after birth, there is an exuberant growth of neurons and synapses (although the precise timing depends on the particular area of the brain in question). In fact, the number of neurons and synapses is much larger in this period than in the highly functioning adult brain. In this phase there is a “crude” competition for both neurons and synapses to “survive,” and only a subset of them (those that are better suited for the intended processing) will manage to do so. The adjacent figure shows the elimination of two neuromuscular junctions (at days 7, 8 and 9 in a transgenic mouse). At day 7, cell NMJ1 is innervated by two axons; at day 9, only one axon innervates this cell. It could be said that the leftmost axon innervating NMJ1 “won the battle.”

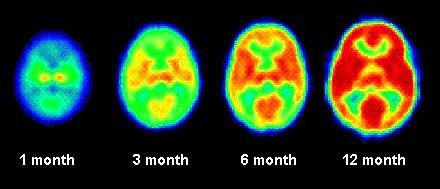

The great advantage of this cell death and axon “pruning” is that our brain increases its efficiency. It is totally incorrect to believe that having “more neurons” or “more synapses” is better than having fewer. The purpose of synaptic overproduction may be to capture and incorporate experience into the developing synaptic architecture of the brain. The next figure shows the evolution of the mammalian visual system, and it clearly shows the improvement in functioning produced by axon pruning (and the consequent reorganization of neuronal projections). In the immature brain, retinal projections are unspecified, while in the adult brain a highly ordered and structured organization is seen. Often, this specialization is the outcome of both internally driven information (“innate” specifications) and externally driven activity (the influence of experience). It can be said that during this neuronal reorganization our brain is highly plastic, in the sense that relatively small amounts of experience have a great impact.

However, it would be wrong to believe that the entire brain develops in a homogeneous fashion. In fact, these periods of cell death and synapse pruning vary significantly from one area of the brain to another. For example, at around age 5 or 6 the number of synapses in the visual and auditory areas of the brain resembles that of the adult. It is not until mid to late adolescence that the number of synapses in the areas of the brain that subserve higher cognitive functions and emotions (prefrontal cortex) approaches adult patterns.

The idea of a “critical period” is that, because of biological constraints (related to cell death and synapse pruning, among other things), our brain would be particularly fit to learn certain things during a relatively short period of time. Experience before or, more importantly, after this period would be less efficient, or even completely inefficient. Although nobody denies the existence of critical periods in development, the way they are usually presented is basically wrong.

As we have mentioned several times, our brain is a highly specialized machine. We have seen that the hippocampus is involved in learning processes that make use of declarative memory. We have also seen that the amygdala is involved in emotional processing. In the same way, the different cognitive processes involve different brain systems. For instance, complex reasoning and planning particularly involve frontal structures. As we have just said, these structures do not reach adult levels until almost adulthood. So it would be difficult to think of the existence of a “critical period” for learning cognitive abilities that rely heavily on these structures. However, there are a few “sensitive periods” (to use a more modern term) in human development, most of which are related to very basic functioning (like the reorganization of the visual cortex presented above) and, importantly, proper functioning does not depend on having “more” experience. In recent years, this notion has received a lot of attention, and it is quite common to find CDs of music “to stimulate your baby’s brain.” There is no scientific evidence that over-stimulating a normal, healthy infant has any beneficial effect. On the contrary, there is evidence that it may be a waste of time.

Newsweek, February 1996: A conclusion by the writer of the article, following summary statements from neurologists: "Children whose neural circuits are not stimulated before kindergarten are never going to be what they could have been." In this article (2-19-96), the "window of opportunity" is also discussed, with a graph that shows how almost all abilities have their critical periods beginning right after birth.

In a recent article, Patricia Kuhl and coworkers (2003) explored the plasticity of human infants to acquire foreign sounds. Research in infant speech perception has shown that, at birth, infants can distinguish all speech sounds, even those that are not produced in their environment and that they will be unable to distinguish as adults. For instance, Spanish adults have great trouble perceiving the Catalan contrast /e-ɛ/, but at four months, Spanish babies can easily discriminate the two sounds (Bosch & Sebastián-Gallés, 2003). Parallel results have been reported comparing infant and adult data with a wide variety of foreign contrasts, like the /r-l/ contrast, which Japanese adults have great difficulty perceiving; during the first months of their lives, however, Japanese infants have no trouble telling the two sounds apart. This ability to perceive foreign contrasts declines between the ages of six and twelve months. That is, in the first year of their lives, infants modify their brains to start to become highly competent listeners and speakers of their maternal language. So, the acquisition of the speech sound repertoire of the maternal language is characterized not by adding new sounds, but by “forgetting” those that are not used (it is easy to see the parallels of this process with the cell death and axon pruning described above).

One question that has been asked is to what extent this “forgetting” is reversible. Popular wisdom assumes that acquiring a second language before puberty usually leads to native-level performance, although scientific research has shown that this is not the case: when sensitive measures are used, significant differences can be found in some linguistic domains between natives and bilinguals who acquired their second language during the first three years of their lives. Kuhl and co-workers have analyzed the effect of exposing 9-month-old American infants to Mandarin Chinese. They tested them with a Mandarin Chinese contrast that adult American listeners have trouble perceiving. Infants were exposed to twelve 25-minute sessions (the total exposure period lasted about four weeks). The results showed that at this early age it is possible to reverse the decline. That is, when compared with a control group that was not exposed to Mandarin Chinese, the infants who were exposed to Chinese better discriminated the Chinese contrast. In this experiment, infants were exposed to “live” materials. That is, there were native speakers of Mandarin talking and playing with the infants. In an additional experiment, two new groups of American infants were exposed to recorded materials, fully equivalent to the “live” ones, either with audiovisual exposure or with just audio exposure. The results showed very clearly that these two groups did not benefit at all from being exposed to Mandarin Chinese: their discrimination of the Chinese sounds was the same as that of the American infants who were not exposed to Chinese. What does a live person provide that a recording does not? Although a definitive answer cannot be provided, some proposals may be advanced. We have seen that brain systems responsible for emotions play a significant role in learning. During the first months of our lives there are important “rewiring” and “reconfiguration” processes that affect the way these structures connect with other brain areas (in particular with the control of attention). Accordingly, it would be reasonable to assume that emotions may play a more significant role in learning in infancy and early childhood than in adulthood, and it is clear that interacting with a human being is more likely than a DVD to create emotions in a nine-month-old.

Researchers, the ivory tower and real life

In the preceding pages, we have seen that significant advances in our comprehension of learning processes have been made and that it is quite likely that these advances will increase even more in coming years. Significant amounts of money are allocated to continue these studies, but will it be enough?

It is important not only to advance our knowledge in the basic mechanisms of learning, but also to advance our knowledge of how our understanding can be used by society. Neuroscience is in its infancy, and it is not easy to build bridges from theory to practice. Usually, “brain-based” applications are received with skepticism by educators and practitioners. True, many of the commercially available programs that claim a “scientific” basis have not been validated. Like the example of the putative benefits of early stimulation mentioned earlier, there are many “neuromyths,” and they have a tremendous negative impact on how science is perceived by society.

Both to solve the problem of building bridges between science and society and to guide the non-specialist, the different administrations need to become involved. Fortunately, there seem to be some steps in that direction. On one hand, the US National Science Foundation has launched the program “Science of Learning Centers” (http://www.nsf.gov/home/crssprgm/slc/). Realizing the need for interdisciplinary approaches, these centers are designed to bring together all disciplines that relate to this topic, ranging from psychologists and biologists to mathematicians, anthropologists and educators. A second initiative that deserves to be mentioned is the OECD “Brain and Learning” project. According to its home page:

Over the past few years, science has made substantial advances in understanding the brain. Consequently, the challenge facing us today, is to explore the relevant research in order to develop a pathway towards a neuroscientific approach to the question ‘how do we learn?’ Our objective is to formulate a sounder basis for the understanding (and, over time, an improvement) of learning and teaching processes and practices, notably in the areas of reading, mathematics and lifelong learning.

These are very ambitious initiatives, but understanding what learning is and how it is affected at all levels is a huge challenge. It undoubtedly needs to be addressed by large-scale teams and programs.

References

Because of the introductory, tutorial approach of this paper, only a short list of general references is provided. The motivated reader may consult these works for both broader and deeper information on the topics addressed here.

Bosch, L. & Sebastián-Gallés, N. (2003). Simultaneous bilingualism and the perception of a language specific vowel contrast in the first year of life. Language and Speech, 46, 217-244.

Bruer, J.T. (1999). The Myth of the First Three Years: A New Understanding of Early Brain Development and Lifelong Learning. Free Press [This book has been translated into Spanish. Ed. Paidós: El

Gazzaniga, M.S. (2002). The New Cognitive Neurosciences.

Helmuth, L. (2003). “Fear and Trembling in the Amygdala.” Science, 300, 568-569.

Kandel, E.R., Schwartz, J.H. and Jessell, T.M. (1999) Principles of Neural Science. Appleton and Lange [This work has been translated into many different languages].

Kuhl, P., Tsao, F.M. & Liu, H.M. (2003) “Foreign-Language Experience in Infancy: Effects of Short-Term Exposure and Social Interaction on Phonetic Learning.” Proceedings of the

OECD (2002). Understanding the Brain: Towards a New Learning Science. OECD Publication [this work has been translated into several languages].

Squire, L.R., Bloom, F.E. et al. (2003). Fundamental Neuroscience.
